How JioHotstar handled 60 Cr views during the Ind vs Pak match (Feat. my wifey’s reactions!)

It’s crazy. I witnessed Kubernetes (K8s) autoscaling in action on 23rd Feb 2025, while watching the India vs Pakistan match with my wife. Everything was perfect — HD clarity, smooth streaming — until Virat Kohli started approaching his century. Suddenly, the resolution dropped, and there was a bit of lag.

My wife, slightly annoyed, asked,

“Why did the HD resolution disappear suddenly and start blurring?”

But the tech guy inside me? I was fascinated. “Dear, just look at how gracefully autoscaling is kicking in at the K8s layer! This is what handling 60+ crore views looks like.”

This wasn’t just a cricket match; it was a real-time stress test for JioHotstar’s infrastructure. And honestly? They handled it brilliantly.

Breaking Down the Madness: How JioHotstar Scaled for 60 Cr Views

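To put this into perspective, many streaming platforms struggle when traffic spikes beyond 5–10 million concurrent users. JioHotstar’s 60+ crore (600 million+) figure counts total views across the match rather than simultaneous viewers, but sustaining that much demand over a single match is nothing short of an engineering marvel.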

Let’s break it down step by step.

1. Autoscaling at the Kubernetes Layer: The Heart of the Operation

Hotstar runs on Kubernetes (K8s), which can scale workloads automatically with the Horizontal Pod Autoscaler (HPA).

- When Kohli neared his century, millions of users joined in real time, driving up requests per second (RPS).

- Kubernetes’ HPA kicked in, dynamically spawning new pods to handle the surge.

- Load balancers ensured that requests were evenly distributed across available resources.
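The Kubernetes documentation describes the HPA’s core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change made while the ratio stays within a tolerance (0.1 by default). Here is a minimal Python sketch of that calculation, assuming requests per second per pod as the metric (in a real cluster that would need a custom-metrics adapter). The function name, replica bounds, and numbers are hypothetical, not Hotstar’s actual configuration, and the real controller also applies stabilization windows and scaling policies.

```python
import math


def desired_replicas(current_replicas: int,
                     current_rps_per_pod: float,
                     target_rps_per_pod: float,
                     min_replicas: int = 1,
                     max_replicas: int = 1000,
                     tolerance: float = 0.1) -> int:
    """Sketch of the HPA scaling rule (hypothetical helper):
    desired = ceil(current * currentMetric / targetMetric),
    clamped to [min_replicas, max_replicas]."""
    ratio = current_rps_per_pod / target_rps_per_pod
    # Inside the tolerance band: keep the current size to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    # Multiply before dividing so whole-number inputs stay exact.
    desired = math.ceil(current_replicas * current_rps_per_pod / target_rps_per_pod)
    # Never go below the floor or above the ceiling configured for the deployment.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(50, 1800, 1000))  # surge: scale out
    print(desired_replicas(50, 1050, 1000))  # small bump: within tolerance
```

With this rule, 50 pods each seeing 1,800 RPS against a 1,000 RPS target would scale to 90 pods, while 1,050 RPS per pod falls inside the tolerance and leaves the deployment alone.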

2. CDN and Edge Caching: The First Line of Defense

With so much traffic, the content had to be served from the closest possible edge location to avoid overwhelming the origin servers.

- CDNs (likely Akamai, AWS CloudFront, and others) cached the live video segments and served them from the edge.

- However, when cache hit ratios dropped, some requests had to be served from the origin, slightly increasing latency.
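The hit-or-miss behaviour described above can be sketched as an LRU cache that falls back to an origin fetch on a miss and tracks its own hit ratio. This is a minimal illustration, assuming a hypothetical `EdgeCache` class and an origin represented as a plain function; it is not how Akamai or CloudFront are implemented internally.

```python
from collections import OrderedDict
from typing import Callable


class EdgeCache:
    """Hypothetical LRU edge cache with an origin fallback on misses."""

    def __init__(self, capacity: int, fetch_from_origin: Callable[[str], bytes]):
        self.capacity = capacity
        self.fetch_from_origin = fetch_from_origin
        self._store: "OrderedDict[str, bytes]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> bytes:
        if key in self._store:
            # Hit: serve from the edge and mark the segment as recently used.
            self._store.move_to_end(key)
            self.hits += 1
            return self._store[key]
        # Miss: take the slower trip to the origin, then cache the result.
        self.misses += 1
        value = self.fetch_from_origin(key)
        self._store[key] = value
        if len(self._store) > self.capacity:
            # Evict the least recently used segment.
            self._store.popitem(last=False)
        return value

    @property
    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0
```

Every miss is an extra round trip to the origin, which is where the added latency comes from. Production CDNs layer TTLs, tiered caches, and request collapsing on top of this, so that many simultaneous misses for the same segment reach the origin only once.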

3. Adaptive Bitrate Streaming (ABR): Keeping the Stream Alive

That resolution drop my wife noticed? That was Adaptive Bitrate Streaming (ABR) doing its job.

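- The match was encoded at several bitrates, and the video player on each device kept measuring its download speed and buffer. When it detected congestion, it switched to a lower-bitrate rendition to prevent buffering.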

- This ensured that even during peak traffic, the stream didn’t crash completely.

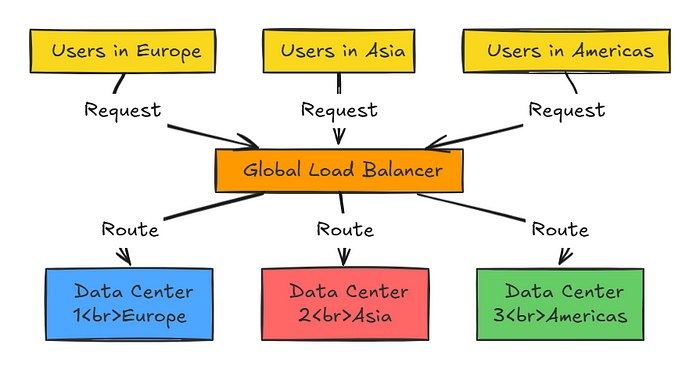
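A simple throughput-based version of that decision picks the highest rung of a bitrate ladder that fits within a safety margin of the measured speed. This is a minimal sketch, assuming a hypothetical five-rung ladder and a 0.8 safety factor; neither is JioHotstar’s real configuration, and production players also weigh buffer occupancy and smooth their throughput estimates.

```python
from typing import Sequence, Tuple

# Hypothetical bitrate ladder: (label, bitrate in kbps), highest quality first.
LADDER: Sequence[Tuple[str, int]] = (
    ("1080p", 6000),
    ("720p", 3500),
    ("480p", 1500),
    ("360p", 800),
    ("240p", 400),
)


def pick_rendition(measured_kbps: float,
                   ladder: Sequence[Tuple[str, int]] = LADDER,
                   safety_factor: float = 0.8) -> str:
    """Return the highest rendition whose bitrate fits the throughput budget."""
    budget = measured_kbps * safety_factor
    for label, kbps in ladder:
        if kbps <= budget:
            return label
    # Even the lowest rung exceeds the budget: keep playing at the lowest
    # quality instead of stalling.
    return ladder[-1][0]
```

For example, if a viewer’s throughput fell from, say, 10 Mbps to 4 Mbps during the rush, this rule would step down from 1080p to 480p rather than letting the buffer run dry.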

4. Load Balancing at a Massive Scale

The traffic wasn’t just hitting one data center — it was being intelligently distributed.

- Hotstar’s backend is likely built on a multi-region cloud architecture, using AWS, GCP, and private data centers.

- Global traffic managers (GTMs) and DNS-based load balancers helped distribute users across regions.

- If one region got overloaded, requests were rerouted to a less busy data center.
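That routing decision can be sketched as “send users to the closest healthy region, and spill over when regions are overloaded.” This is a minimal illustration, assuming a hypothetical `Region` record, made-up region names, and an 85% utilization threshold; real GTMs combine health checks, geolocation, and weighted policies in vendor-specific ways.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Region:
    name: str
    latency_ms: float   # measured latency from the user to this region
    utilization: float  # fraction of capacity in use, 0.0 to 1.0


def choose_region(regions: List[Region], overload_threshold: float = 0.85) -> Region:
    """Prefer the lowest-latency region under the threshold; if every region
    is overloaded, fall back to the least-utilized one."""
    if not regions:
        raise ValueError("no regions available")
    healthy = [r for r in regions if r.utilization < overload_threshold]
    if healthy:
        return min(healthy, key=lambda r: r.latency_ms)
    return min(regions, key=lambda r: r.utilization)
```

With a nearby region at 95% utilization, users are rerouted to the next-closest region that still has headroom, trading a few milliseconds of latency for a stream that keeps playing.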

5. WebSockets for Real-Time Updates

Handling chat messages, live scores, and reactions is another beast. Hotstar likely uses WebSockets instead of HTTP polling for real-time updates, reducing unnecessary network load.

- With millions sending emojis and live comments, WebSockets ensured messages were delivered efficiently.

- A fallback mechanism probably switched to long polling in case of WebSocket failures.
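The push-versus-poll split can be sketched as an in-memory hub that pushes each event straight to WebSocket-connected clients, while clients that fell back to polling catch up using a cursor into an ordered log. This is a single-process illustration with a hypothetical `LiveEventHub` class and method names; it uses no real WebSocket library, and a real system would fan out across many servers through a message broker and trim the log.

```python
from typing import Callable, Dict, List, Tuple


class LiveEventHub:
    """Hypothetical fan-out hub: push to WebSocket clients, and let
    long-polling clients fetch missed events by cursor."""

    def __init__(self) -> None:
        self.log: List[str] = []  # ordered event log (unbounded in this sketch)
        self.push_clients: Dict[str, Callable[[str], None]] = {}

    def connect_websocket(self, client_id: str, send: Callable[[str], None]) -> None:
        # Register a callback that stands in for sending over an open socket.
        self.push_clients[client_id] = send

    def disconnect_websocket(self, client_id: str) -> None:
        # The client falls back to polling; stop pushing to it.
        self.push_clients.pop(client_id, None)

    def publish(self, message: str) -> None:
        self.log.append(message)
        for send in self.push_clients.values():
            send(message)

    def poll(self, cursor: int) -> Tuple[List[str], int]:
        """Return events after `cursor` plus the new cursor. A real long poll
        would hold the request open until new data arrives."""
        return self.log[cursor:], len(self.log)
```

Pushed clients receive each emoji or score update the moment it is published, while a polling client only needs to remember its cursor to pick up exactly what it missed.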

Lessons from JioHotstar’s Scaling Masterpiece

What JioHotstar achieved on 23rd Feb 2025 wasn’t just about streaming a cricket match — it was a case study in cloud scalability.

- K8s Autoscaling: Automatically adjusted capacity based on demand.

- CDN Optimization: Cached content at the edge to reduce origin load.

- Adaptive Bitrate Streaming: Ensured uninterrupted streaming even during spikes.

- Multi-Region Load Balancing: Prevented a single point of failure.

- WebSockets for Real-Time Engagement: Handled millions of concurrent messages.

It’s not every day that cricketers and DevOps engineers share the same challenge — handling massive concurrent traffic!

So, the next time you see a slight resolution drop during a high-traffic event, don’t complain. Instead, appreciate the brilliance of the engineering behind the scenes!

Happy Reading ⚡⚡⚡